2025-02-25

Sam Reed

Off The Deep End

How does OpenAI's Deep Research Agent fit into the bigger picture?

# OpenAI’s Deep Research Agent

From an OpenAI announcement on February 2, 2025:

Introducing deep research

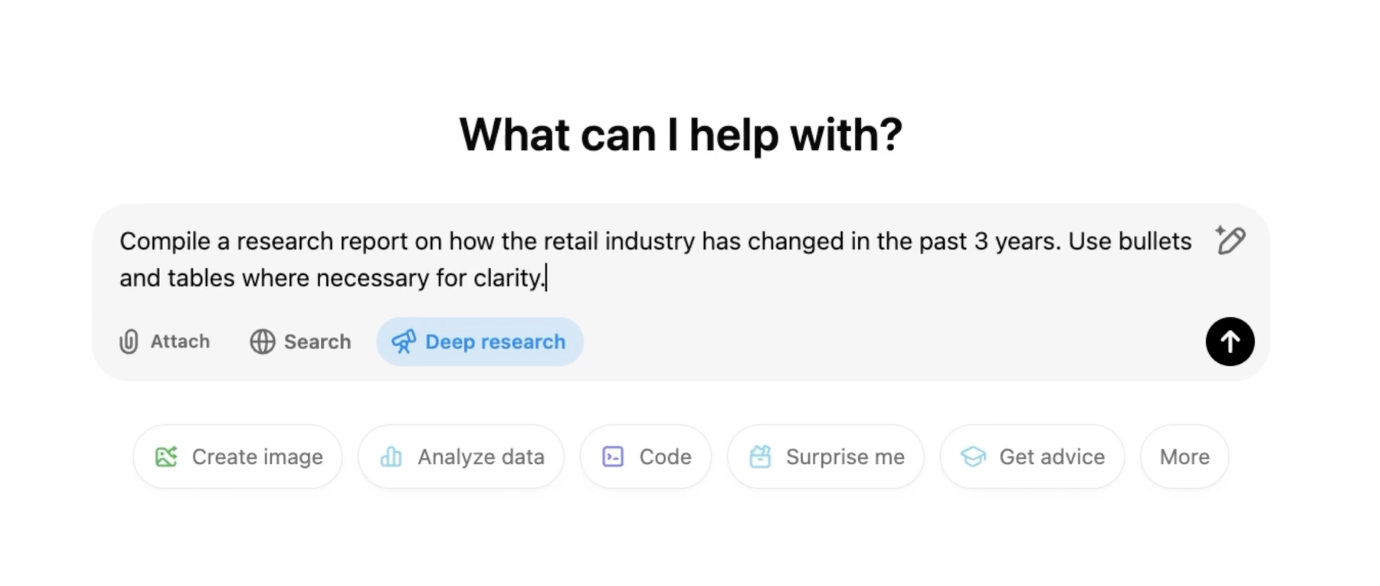

[Deep research is] An agent that uses reasoning to synthesize large amounts of online information and complete multi-step research tasks for you. Available to [ChatGPT] Pro users today…

Today we’re launching deep research in ChatGPT, a new agentic capability that conducts multi-step research on the internet for complex tasks. It accomplishes in tens of minutes what would take a human many hours.

Deep research is OpenAI’s next agent that can do work for you independently—you give it a prompt, and ChatGPT will find, analyze, and synthesize hundreds of online sources to create a comprehensive report at the level of a research analyst. Powered by a version of the upcoming OpenAI o3 model that’s optimized for web browsing and data analysis, it leverages reasoning to search, interpret, and analyze massive amounts of text, images, and PDFs on the internet, pivoting as needed in reaction to information it encounters.

The ability to synthesize knowledge is a prerequisite for creating new knowledge. For this reason, deep research marks a significant step toward our broader goal of developing AGI, which we have long envisioned as capable of producing novel scientific research.

It’s December of 2015. As evidenced by the positive critical reception of A24’s sci-fi thriller Ex Machina, anxiety over sentient AI has entered the cultural zeitgeist. You’ve caught glimpses of this “AI”—this mysterious blend of software, hardware and soul—quietly (menacingly, perhaps) lurking in America’s kitchen corners. That’s right, it’s 2015 and AI is here, it’s for sale, and its name is Alexa.

Right.

Though time has shown us that Alexa didn’t live up to its initial hype (Amazon still makes Echo devices…don’t count ’em out), another, much lesser-known event that took place in 2015, the founding of a nonprofit research lab called OpenAI, has certainly filled in the gap of technological overpromise and under-delivery.

That’s right, OpenAI was hard at work for quite some time before the explosive growth of ChatGPT in November 2022. Let’s take a quick look at the blog post that announced OpenAI to the world in 2015:

OpenAI is a non-profit artificial intelligence research company. Our goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return.

…

Because of AI’s surprising history, it’s hard to predict when human-level AI might come within reach. When it does, it’ll be important to have a leading research institution which can prioritize a good outcome for all over its own self-interest.

We’re hoping to grow OpenAI into such an institution. As a non-profit, our aim is to build value for everyone rather than shareholders. Researchers will be strongly encouraged to publish their work, whether as papers, blog posts, or code, and our patents (if any) will be shared with the world. We’ll freely collaborate with others across many institutions and expect to work with companies to research and deploy new technologies.

OpenAI’s research director is Ilya Sutskever, one of the world experts in machine learning.

There’s been a lot of drama about how OpenAI has changed over the years. In case you’ve been talking to your Echo Dot under a rock this whole time, the short story is that OpenAI was founded (see above) as a nonprofit research lab with the goal of advancing AI instead of generating a financial return. As a Vox article that covers this topic in detail notes, the company started to bump up against the inherent limitations of nonprofit capital raising as early as 2019 and has been working since then to pull off the transition to an at least partially for-profit entity.

This nonprofit-to-for-profit transition has been messy, resulting in 1) the OpenAI board’s short-lived ouster of Sam Altman in November 2023 and 2) the resignation of many of the more academic, research-oriented team members from the company (compare Ilya’s new company’s website to OpenAI’s to get a sense of the creative differences). Eventually we got to the OpenAI of today, which is a nonprofit that owns a for-profit that is trying to buy the nonprofit before Elon Musk does, and, most importantly for the rest of this week’s edition of Automato 🍅, builds things like “Deep Research” agents.

# Time’s a Wastin’

I think one of the great questions of our time, given the presumed significance of attaining advanced artificial intelligence, is what OpenAI should actually be doing. The question is a little wacky given OpenAI’s seemingly inexhaustible access to funding (if they can iron out the aforementioned capital structure issues), but if you’re willing to assume that 1) OpenAI employs some of the most talented machine learning engineers in the world and 2) maintaining AI supremacy has large implications for national security and economic prosperity, it follows that opportunity cost should be a significant factor in deciding which initiatives the company undertakes.

I’d like to analyze the Deep Research Agent within the context of opportunity cost.

For the typical business, opportunity cost can arise from a number of factors, with financial constraints as the most basic driver. CEOs navigating the competitive business landscape with limited capital at their disposal must decide whether to hire, invest in new lines of business, fund marketing efforts, engage in M&A, pay out shareholders, and more. Deploying human talent effectively is obviously critical as well, but people need to get paid, so those decisions are either coupled with or come after the financial ones.

From the outside looking in, it feels like OpenAI has the opposite problem. To be clear, I’m not saying they don’t have any financial problems to fix (their commercial products aren’t profitable, and as discussed above, converting to a more fundraising-friendly structure hasn’t been easy), but there’s a difference between a huge problem and a huge nuisance, and investors keep showing that capital raising falls into the latter category for Altman & Co.

To me, OpenAI feels much more like a professional sports team than a typical business, in that their problems are driven by a lack of genuinely qualified human capital at their disposal (an ironic sentence for an AI company). This is magnified by the fact that the race to artificial general intelligence may indeed have only one winner.

With limited ability to find and deploy employees who are capable of making novel contributions to the field of artificial intelligence, it follows that leadership would take the utmost care in selecting projects for their superstar employees to pursue. Right?

Right?

Well…maybe not…because in the case of the Deep Research Agent, it kind of looks like the exact same product was built and open-sourced by a research team at Stanford over a year ago.

Here’s a description of Stanford’s STORM product from its GitHub page:

STORM is a LLM system that writes Wikipedia-like articles from scratch based on Internet search. Co-STORM further enhanced its feature by enabling human to collaborative LLM system to support more aligned and preferred information seeking and knowledge curation.

While the system cannot produce publication-ready articles that often require a significant number of edits, experienced Wikipedia editors have found it helpful in their pre-writing stage.

More than 70,000 people have tried our live research preview.

From the Stanford STORM team.

A product that conducts internet research and builds Wikipedia-style documents with full citations. More than 70,000 users. Used by experienced Wikipedia editors…

This is the same thing as the Deep Research Agent! I know some of you reading this might list slightly different features or whatever, but come on: from the standpoint of novel technological achievement, this feels like the exact same thing. It’s LLMs that take inputs, trigger web searches, and then create a reliable output. I’m not saying that it isn’t cool, I’m just saying that it has already been done.

Think back to the Deep Research Agent announcement, which read, “The ability to synthesize knowledge is a prerequisite for creating new knowledge. For this reason, deep research marks a significant step toward our broader goal of developing AGI, which we have long envisioned as capable of producing novel scientific research.”

One more time. Deep research marks a significant step toward our broader goal of developing AGI.

I don’t know, man. I don’t know.

The thing you need to keep in mind about 2025 is that nothing matters more for a business than having people’s attention online. This is especially true in competitive industries without much product differentiation, like today’s Large Language Model industry (Google, xAI/Grok, OpenAI, Anthropic, Meta, DeepSeek, open-source options, and more). This is why social media influencers are worth so much in the world of marketing: people pay attention to influencers, so influencers can be used to advertise.

OpenAI has a huge following. Everything that they do generates a big buzz both on social media and in mainstream news outlets. Is the Deep Research Agent the start of a trend where OpenAI just copies what’s being built with their models out in the wild, just to keep the media buzz alive and keep investors lining up?

You can’t fault the strategy for a money-making enterprise! I’d love to be able to pull this off too. It’s just not what you’d expect from a nonprofit founded to cure all diseases and push humanity to the stars.

However…

# A new look

There’s something important to note about OpenAI’s Deep Research Agent announcement that we haven’t touched on yet. After describing what the Deep Research Agent is, the announcement goes on to say that it is “Powered by a version of the upcoming OpenAI o3 model that’s optimized for web browsing and data analysis.”

This is a subtle yet interesting point that is worthy of consideration. To break it down, it’s important to first talk about how Large Language Models like the ones that OpenAI builds are fundamentally closed off from the internet.

Think back to middle school algebra class for a second. Imagine this question on your math homework:

Consider the function f(x) = x + 2. What is the result of f(3)?

The answer to the question is 5, because we’re plugging 3 into the expression x + 2, and 3 + 2 is 5.

Now imagine these are the next two problems on your homework:

What is the result of f(4)? What is the result of f(5)?

Did you get 6 and 7? If so, we’re rolling.

Now, what if this was the last question:

What is the result of f(search google for “Best FanDuel bets tonight”)?

Maybe a mathematician will correct me, but this last problem doesn’t make much sense.
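If it helps to see the homework as code, here’s a minimal Python sketch. The function `f` is just the x + 2 from the problems above; the string passed in the last call is our own stand-in for the “search google” problem. Python doesn’t go searching for anything, it simply refuses to add 2 to a piece of text.

```python
def f(x: int) -> int:
    """The homework function: f(x) = x + 2."""
    return x + 2


# The first three problems: numbers in, numbers out.
print(f(3), f(4), f(5))  # prints: 5 6 7

# The last problem: the input isn't a number at all.
try:
    f('search google for "Best FanDuel bets tonight"')
except TypeError as err:
    # No search happens; Python just can't add 2 to a piece of text.
    print("Doesn't make sense:", err)
```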

The same thing goes for Large Language Models. Conceptually, LLMs are just giant, complex mathematical functions that take an input and return an output, but the inputs and outputs are representations of human text and speech.
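To make the “text in, text out” idea concrete, here’s a deliberately tiny toy in Python. The word table and its scores are made up for illustration (a real LLM works on tokens rather than whole words and computes its scores with billions of learned parameters, not a lookup table), but the shape is similar: a prompt goes in, words come out one at a time, and nothing in the function can reach the internet.

```python
# Hypothetical, hand-written "weights": for each word, a score for each possible next word.
# A real LLM computes scores like these with billions of learned parameters.
NEXT_WORD_SCORES = {
    "what": {"is": 0.9, "was": 0.1},
    "is": {"the": 0.7, "a": 0.3},
    "the": {"answer": 0.6, "question": 0.4},
    "answer": {"?": 1.0},
}


def toy_model(prompt: str, max_new_words: int = 5) -> str:
    """Text in, text out: a pure function with no way to reach the internet."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        if not words:
            break
        scores = NEXT_WORD_SCORES.get(words[-1])
        if not scores:
            break  # no known continuation, so stop generating
        # Greedy decoding: append the single highest-scoring next word.
        words.append(max(scores, key=scores.get))
    return " ".join(words)


print(toy_model("What"))  # prints: what is the answer ?
print(toy_model("What") == toy_model("What"))  # prints: True (same input, same output)
```

Always picking the top-scoring word keeps this toy fully deterministic; real chat products often sample instead, but either way the model itself only maps input text to output text.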

The reason this function framing is so important to keep in mind is that Large Language Models can’t actually search the internet (again, they’re just equations that take inputs and return outputs). But if you combine their outputs with something that can search the internet, say, a traditional program like a search engine, you can stack the AI and non-AI pieces together like Legos and create something that is no longer closed off from the internet. The industry has started calling these things “Agents.”
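Here’s a rough sketch of that Lego-stacking in Python, under loud assumptions: `fake_model` and `fake_search` are hypothetical stand-ins (the “model” just echoes or counts, and the “search engine” returns one canned snippet), and both prompts are made up. The point is the plumbing: the agent is ordinary code that calls the model, hands the model’s output to a search tool, and feeds the results back into the model.

```python
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: text in, text out, no internet access."""
    prefix = "Write a search query for: "
    if prompt.startswith(prefix):
        # Pretend the model turned the question into search keywords.
        return prompt[len(prefix):]
    # Pretend the model wrote a report; here it just counts the snippets it was given.
    return f"Summary of {prompt.count('Snippet')} source(s)"


def fake_search(query: str) -> list[str]:
    """Hypothetical stand-in for a search engine: ordinary code, no AI."""
    return [f"Snippet about {query!r} from example.com"]


def make_agent(
    model: Callable[[str], str],
    search: Callable[[str], list[str]],
) -> Callable[[str], str]:
    """Stack the AI piece and the non-AI piece together like Legos."""
    def agent(question: str) -> str:
        query = model(f"Write a search query for: {question}")  # model call #1
        results = search(query)                                 # plain program, not AI
        context = "\n".join(results)
        return model(f"Answer {question!r} using:\n{context}")  # model call #2
    return agent


agent = make_agent(fake_model, fake_search)
print(agent("best stocks 2025"))  # prints: Summary of 1 source(s)
```

Swap in a real model and a real search API and the structure stays the same; the model is still just a function, and the surrounding code is what lets it reach the outside world.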

Most of what OpenAI has been trying to do up to this point is build models that are meant to answer people’s questions (which is exactly what we all want from tools like ChatGPT). But now (if what they said in the Deep Research Agent announcement is true) it sounds like OpenAI has started to optimize new models for tasks other than good Q&A responses, perhaps for doing things like generating good internet search keywords. For example, if you asked the question:

“What are the best stocks to buy in 2025?”

An old model might respond with something like:

Identifying the best stock to buy in 2025 involves analyzing current market trends, company financials…

Whereas one of these new, specialized models might be more likely to respond to the same question with:

```json
{
  "action": {
    "type": "Web search",
    "searchTerms": ["Stocks", "2025", "Bloomberg"]
  }
}
```

The latter response isn’t much use to a person, but that’s the point: it’s not meant for human consumption. A response like this is meant to be parsed by a program (i.e., not AI, just regular old code) and executed as an internet search. The results would then be collected (via web scraping or other methods) and potentially passed to another, more ChatGPT-like model to be assembled into a comprehensible response.
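Here’s a minimal Python sketch of that “regular old code,” assuming the model emitted exactly the JSON shown above. The `web_search` tool, its canned results, the `TOOLS` table, and the wording of the hand-off prompt are all hypothetical; a real system would call an actual search API and then send the prompt to a second model.

```python
import json

# Hypothetical model output, matching the example action above.
RAW_OUTPUT = '{"action": {"type": "Web search", "searchTerms": ["Stocks", "2025", "Bloomberg"]}}'


def web_search(terms: list[str]) -> list[str]:
    """Hypothetical search tool; a real one would call a search API and scrape the results."""
    query = " ".join(terms)
    return [f"result 1 for {query}", f"result 2 for {query}"]


# Map each action type this program understands to ordinary (non-AI) code.
TOOLS = {"Web search": web_search}


def run_action(raw: str) -> list[str]:
    """Parse the model's JSON and execute the requested action."""
    action = json.loads(raw)["action"]  # parse: text -> Python dict
    tool = TOOLS.get(action["type"])
    if tool is None:
        raise ValueError(f"unknown action type: {action['type']!r}")
    return tool(action["searchTerms"])  # execute the search


def writer_prompt(question: str, results: list[str]) -> str:
    """Bundle the collected results for a second, ChatGPT-like model (not called here)."""
    sources = "\n".join(f"- {r}" for r in results)
    return f"Question: {question}\nSources:\n{sources}\nWrite a readable answer."


results = run_action(RAW_OUTPUT)
print(writer_prompt("What are the best stocks to buy in 2025?", results))
```

Keeping the model’s output as structured JSON is what makes this split work: the program never has to guess what the model meant, it just looks up the action type and runs the matching tool.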

What we can glean from this is that if OpenAI is transitioning from a pure focus on training massive, general-purpose, human-facing models to specialized models that are meant to dovetail with non-AI programs, such as programs that conduct internet searches, then maybe OpenAI feels it no longer needs to push the frontiers of its flagship models to achieve artificial general intelligence and is instead shifting to building infrastructure that more easily connects its models with the outside world. In other words, maybe they feel like the brain has successfully been built, and now it’s time to give it arms and hands.

If this is true, then maybe my earlier criticism is unwarranted, and this is exactly what OpenAI should be doing. There are a lot of ways in which this makes sense, the main one (in my mind) being that, as discussed earlier, these models are just isolated functions at their core, and without building them the boats and bridges necessary to escape their islands, they may never truly come “alive.”

OpenAI’s goal from the start has been to build Artificial General Intelligence. Who knows, maybe they’re as close as they say they are.

See you next week!